Build and deploy a UI for your generative AI applications with AWS and Python

AWS Machine Learning - AI

NOVEMBER 6, 2024

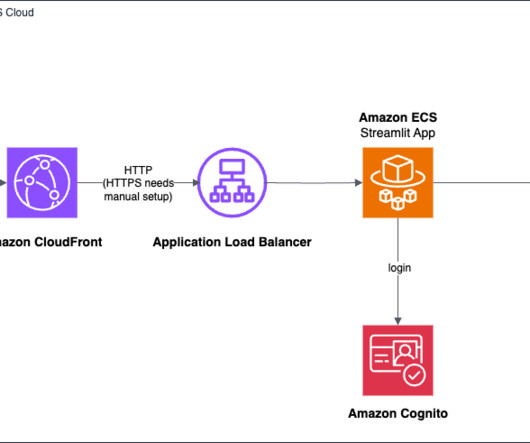

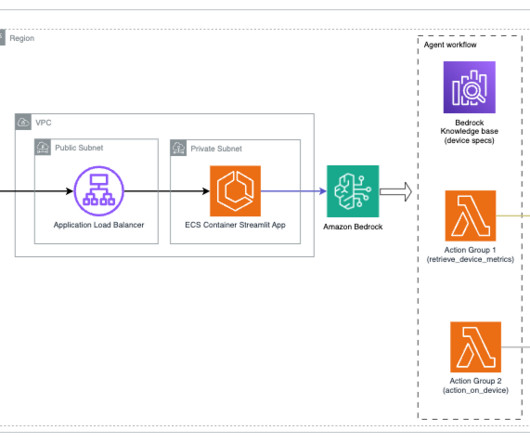

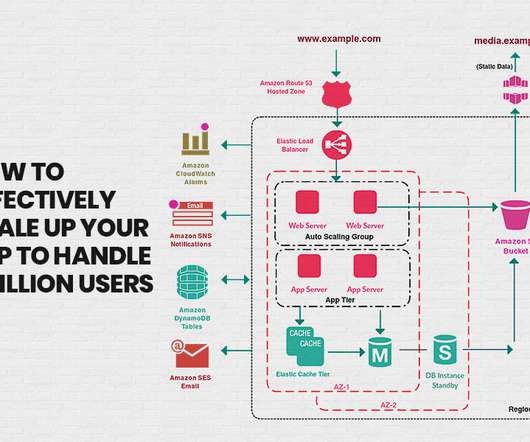

The custom header value is a security token that CloudFront uses to authenticate to the load balancer. By using Streamlit and AWS services, data scientists can focus on their core expertise while still delivering secure, scalable, and accessible applications to business users. When you deploy more than one application, choose a different stack name for each.
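To make the custom header idea concrete, here is a minimal sketch of the logic behind a shared-secret header check. It is an illustration under stated assumptions, not the configuration the post deploys: the header name `X-Custom-Header` and the helper functions `generate_header_value` and `is_from_cloudfront` are hypothetical, and in a real deployment the check would normally be enforced by the load balancer rather than by application code.

```python
import hmac
import secrets

# Hypothetical header name; the post does not state which name it uses.
CUSTOM_HEADER_NAME = "X-Custom-Header"


def generate_header_value() -> str:
    """Create a random, URL-safe token to use as the shared header value."""
    return secrets.token_urlsafe(32)


def is_from_cloudfront(headers: dict[str, str], expected_value: str) -> bool:
    """Return True only if the request carries the expected header value."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    supplied = normalized.get(CUSTOM_HEADER_NAME.lower(), "")
    # compare_digest compares in constant time to avoid timing leaks.
    return hmac.compare_digest(supplied.encode(), expected_value.encode())


if __name__ == "__main__":
    token = generate_header_value()
    # A request forwarded with the correct header value is accepted.
    print(is_from_cloudfront({CUSTOM_HEADER_NAME: token}, token))
    # A request that reaches the origin without the header is rejected.
    print(is_from_cloudfront({}, token))
```

The point of the sketch is that the token works like a password shared between the two services: a request that bypasses CloudFront and reaches the load balancer directly will not carry the value, so it can be refused.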
