Can we trust Google Cloud Load Balancing?

Xebia

APRIL 12, 2023

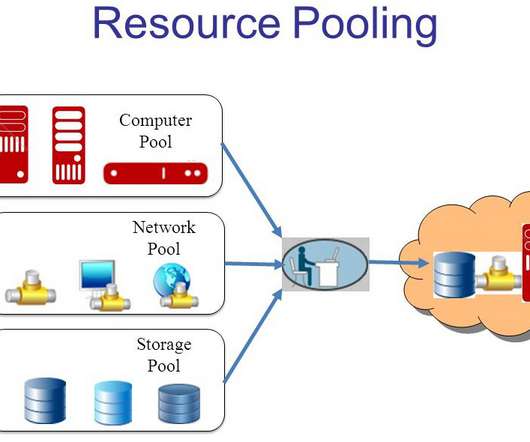

As the cloud, and with it Cloud Service Providers (CSPs), takes a more prominent place in the digital world, a question arises: how secure is our data with Google Cloud, specifically when we use its Cloud Load Balancing offering? During threat modelling, the SSL load balancing offerings often come into the picture.
