Build and deploy a UI for your generative AI applications with AWS and Python

AWS Machine Learning - AI

NOVEMBER 6, 2024

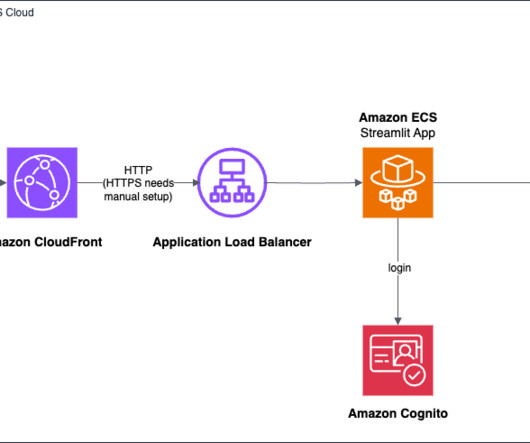

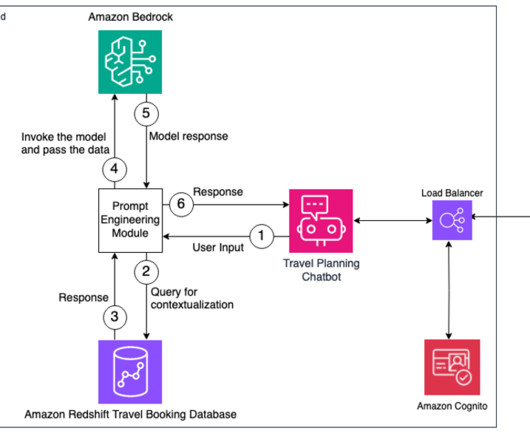

The custom header value is a security token that CloudFront uses to authenticate on the load balancer.

Prerequisites

As a prerequisite, you need to enable model access in Amazon Bedrock and have access to a Linux or macOS development environment.

Choose a different stack name for each application.

See the README.md
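To make the custom header idea above concrete, here is a minimal Python sketch of the check. It is an illustration under stated assumptions, not code from the post's repository: the header name `X-Custom-Header` and the helpers `generate_token` and `is_from_cloudfront` are hypothetical, and in the architecture the excerpt describes the comparison presumably happens on the load balancer rather than in application code.

```python
import hmac
import secrets
from typing import Mapping

# Hypothetical header name; the excerpt does not say which name is used.
HEADER_NAME = "X-Custom-Header"


def generate_token(nbytes: int = 32) -> str:
    """Create a random, URL-safe secret to use as the custom header value."""
    return secrets.token_urlsafe(nbytes)


def is_from_cloudfront(headers: Mapping[str, str], expected_token: str) -> bool:
    """Return True only if the request carries the expected secret header."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    supplied = normalized.get(HEADER_NAME.lower(), "")
    # Constant-time comparison avoids leaking the token through timing.
    return hmac.compare_digest(supplied.encode(), expected_token.encode())


if __name__ == "__main__":
    token = generate_token()
    print(is_from_cloudfront({"x-custom-header": token}, token))
    print(is_from_cloudfront({}, token))
```

The point of the design is that requests which bypass CloudFront and reach the load balancer directly do not carry the secret value, so they can be rejected.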
