Build a multi-tenant generative AI environment for your enterprise on AWS

AWS Machine Learning - AI

NOVEMBER 7, 2024

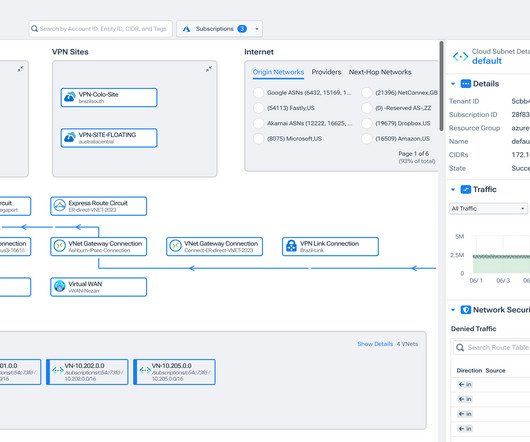

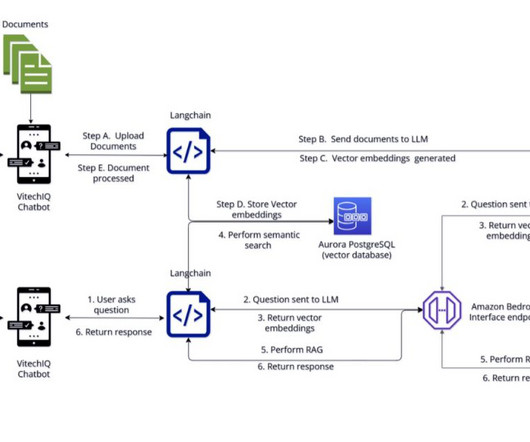

Shared components refer to the functionality and features shared by all tenants. Load balancer – Another option is to use a load balancer that exposes an HTTPS endpoint and routes the request to the orchestrator. You can use AWS services such as Application Load Balancer to implement this approach.

Let's personalize your content