Multi-LLM routing strategies for generative AI applications on AWS

AWS Machine Learning - AI

APRIL 9, 2025

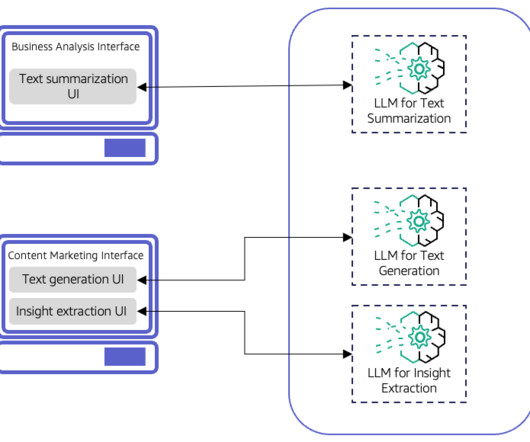

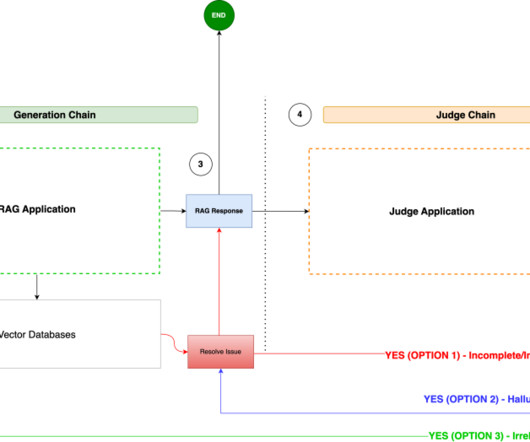

For instance, consider an AI-driven legal document analysis system designed for businesses of varying sizes, offering two primary subscription tiers: Basic and Pro. Routing requests across multiple LLMs also allows for a flexible and modular design, where new LLMs can be quickly plugged into or swapped out of a UI component without disrupting the overall system.
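
One way to picture the tier-based, pluggable design described above is a small routing table that maps each subscription tier to a model backend. The sketch below is illustrative only: the `TierRouter` class, the `small_model` and `large_model` stand-ins, and the choice of which tier maps to which model are all assumptions, not details from the original post. In a real application the stand-in functions would wrap calls to the actual LLM endpoints.

```python
from typing import Callable, Dict

# A model backend is any callable that takes a prompt and returns text.
ModelFn = Callable[[str], str]


def small_model(prompt: str) -> str:
    """Hypothetical stand-in for a smaller, lower-cost LLM."""
    return f"[small-model] {prompt}"


def large_model(prompt: str) -> str:
    """Hypothetical stand-in for a larger, more capable LLM."""
    return f"[large-model] {prompt}"


class TierRouter:
    """Routes a request to the model registered for a subscription tier."""

    def __init__(self) -> None:
        self._routes: Dict[str, ModelFn] = {}

    def register(self, tier: str, model: ModelFn) -> None:
        # Registering a tier again replaces its model, which is how a
        # backend gets swapped without touching the calling code.
        self._routes[tier.lower()] = model

    def route(self, tier: str, prompt: str) -> str:
        try:
            model = self._routes[tier.lower()]
        except KeyError:
            raise ValueError(f"no model registered for tier {tier!r}") from None
        return model(prompt)


# Assumed mapping: Basic uses the smaller model, Pro the larger one.
router = TierRouter()
router.register("basic", small_model)
router.register("pro", large_model)
```

Calling `router.route("Pro", "Summarize this contract")` dispatches to whichever backend is currently registered for the Pro tier. Swapping in a new model is a single `register` call, so the code that issues requests does not change.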
