Vitech uses Amazon Bedrock to revolutionize information access with AI-powered chatbot

AWS Machine Learning - AI

MAY 30, 2024

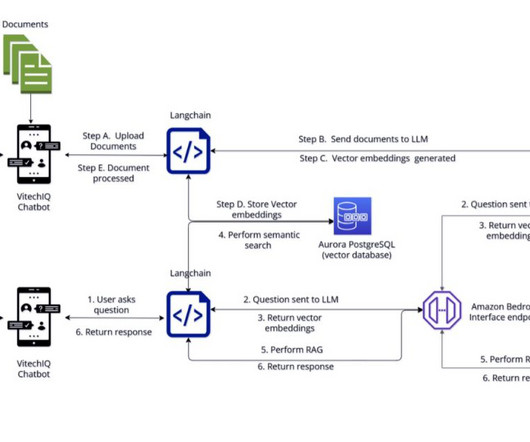

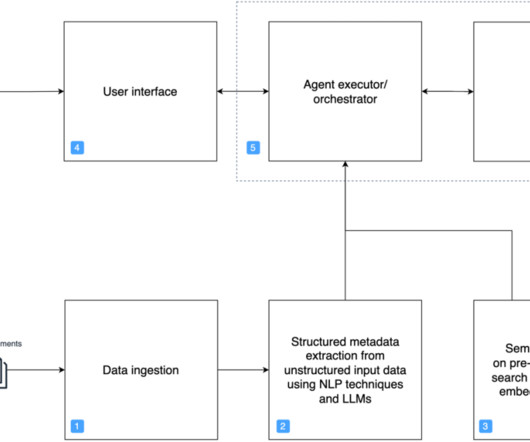

Retrieval Augmented Generation vs. fine-tuning

Traditional LLMs have no understanding of Vitech’s processes and flow, so their power must be augmented with Vitech’s knowledge base. Additionally, Vitech uses Amazon Bedrock runtime metrics to measure latency, performance, and number of tokens. “We
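As a rough illustration of how the runtime metrics mentioned above could be read, the sketch below queries Amazon CloudWatch for latency and token counts in the `AWS/Bedrock` namespace. It is a minimal sketch under stated assumptions, not Vitech's actual setup: the helper names `build_metric_queries` and `fetch_bedrock_metrics`, the region, the time window, and the placeholder model ID are all hypothetical, and the network call requires `boto3` plus valid AWS credentials.

```python
from datetime import datetime, timedelta, timezone

# CloudWatch namespace and metric names published by Amazon Bedrock.
BEDROCK_NAMESPACE = "AWS/Bedrock"
# Metric name -> statistic to request (assumed choices for illustration).
METRICS = {
    "InvocationLatency": "Average",  # milliseconds per invocation
    "InputTokenCount": "Sum",        # tokens sent to the model
    "OutputTokenCount": "Sum",       # tokens generated by the model
}


def build_metric_queries(model_id, period_seconds=300):
    """Build the MetricDataQueries list for CloudWatch get_metric_data."""
    queries = []
    for index, (name, stat) in enumerate(METRICS.items()):
        queries.append({
            "Id": f"m{index}",  # query IDs must start with a lowercase letter
            "Label": name,
            "MetricStat": {
                "Metric": {
                    "Namespace": BEDROCK_NAMESPACE,
                    "MetricName": name,
                    "Dimensions": [{"Name": "ModelId", "Value": model_id}],
                },
                "Period": period_seconds,
                "Stat": stat,
            },
        })
    return queries


def fetch_bedrock_metrics(model_id, hours=1, region_name="us-east-1"):
    """Fetch recent Bedrock metrics for one model (first result page only)."""
    import boto3  # imported here so the query builder works without boto3

    cloudwatch = boto3.client("cloudwatch", region_name=region_name)
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_data(
        MetricDataQueries=build_metric_queries(model_id),
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
    )
    # Map each metric label to its (timestamp, value) data points.
    return {
        result["Label"]: list(zip(result["Timestamps"], result["Values"]))
        for result in response["MetricDataResults"]
    }


if __name__ == "__main__":
    # "example-model-id" is a placeholder; substitute the model actually in use.
    for query in build_metric_queries("example-model-id"):
        print(query["Label"], query["MetricStat"]["Stat"])
```

Keeping the query construction separate from the network call lets the request shape be inspected or unit-tested without AWS access. Pagination via `NextToken` is omitted for brevity.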
