Build a multi-tenant generative AI environment for your enterprise on AWS

AWS Machine Learning - AI

NOVEMBER 7, 2024

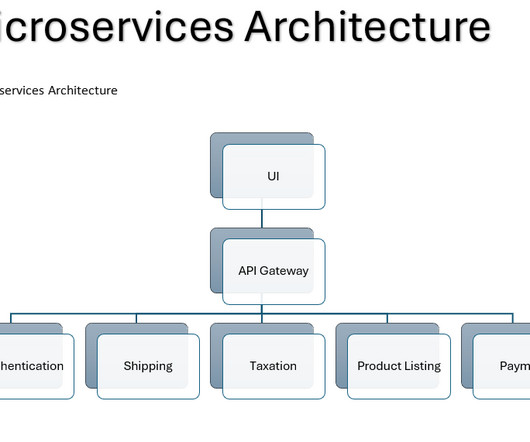

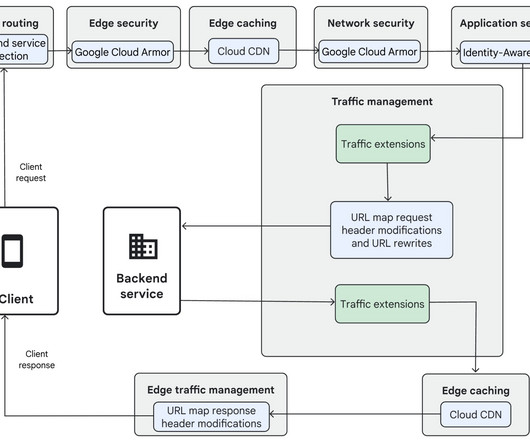

Each component in the previous diagram can be implemented as a microservice and is multi-tenant in nature, meaning it stores details related to each tenant, uniquely represented by a tenant_id. This in itself is a microservice, inspired by the Orchestrator Saga pattern in microservices. API Gateway also provides a WebSocket API.
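To make the two ideas above concrete, here is a minimal sketch in Python. It is an illustration only, not the implementation described in the post: `TenantStore`, `run_saga`, and the step names are hypothetical, and an in-memory dictionary stands in for whatever data store a real component would use. It shows data partitioned by `tenant_id`, and an orchestrator that runs steps in order and calls compensating actions in reverse if one fails, which is the core of the Orchestrator Saga pattern.

```python
from typing import Callable, Dict, List, Tuple


class TenantStore:
    """Hypothetical in-memory stand-in for a component's store, keyed by tenant_id."""

    def __init__(self) -> None:
        self._data: Dict[str, Dict[str, str]] = {}

    def put(self, tenant_id: str, key: str, value: str) -> None:
        self._data.setdefault(tenant_id, {})[key] = value

    def get(self, tenant_id: str, key: str) -> str:
        # Every lookup is scoped to one tenant; there is no cross-tenant read.
        return self._data[tenant_id][key]


# A saga step: (name, action, compensating action). Both take the tenant_id.
Step = Tuple[str, Callable[[str], None], Callable[[str], None]]


def run_saga(tenant_id: str, steps: List[Step]) -> List[str]:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    log: List[str] = []
    done: List[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action(tenant_id)
            log.append(f"done:{name}")
            done.append(step)
        except Exception:
            log.append(f"failed:{name}")
            for prev_name, _, compensate in reversed(done):
                compensate(tenant_id)
                log.append(f"undone:{prev_name}")
            break
    return log
```

In a real deployment each step would typically be a call to another microservice rather than a local function, and the orchestrator would persist its log so that a saga can resume after a crash; both are left out here to keep the sketch short.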
