Accelerate AWS Well-Architected reviews with Generative AI

AWS Machine Learning - AI

MARCH 4, 2025

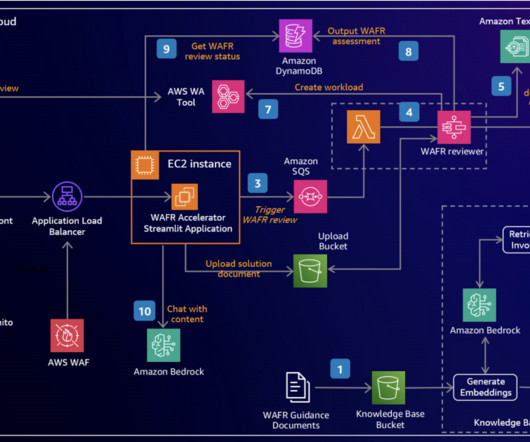

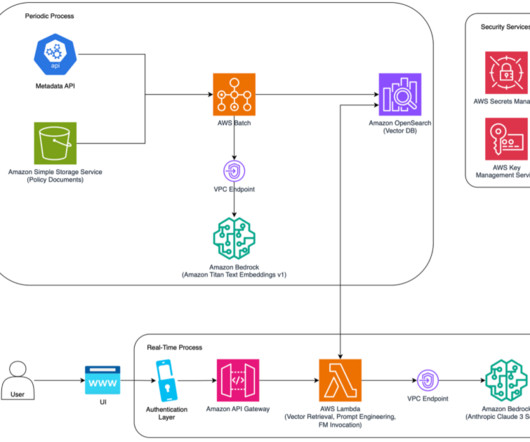

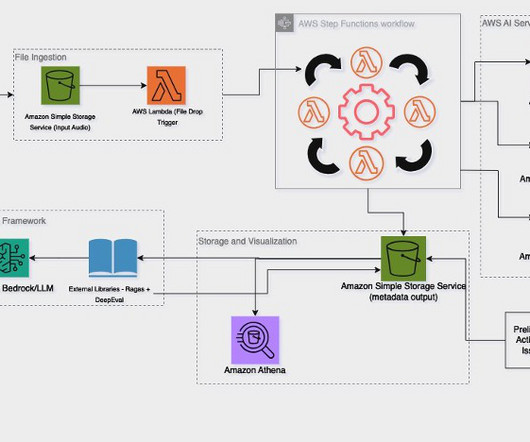

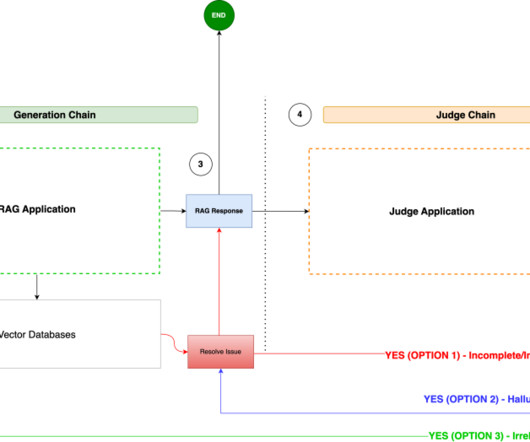

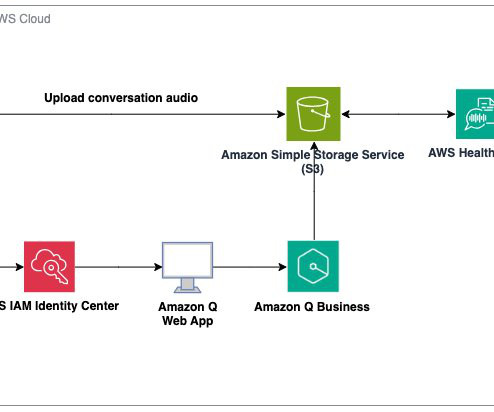

In this post, we explore a generative AI solution leveraging Amazon Bedrock to streamline the WAFR process. We demonstrate how to harness the power of LLMs to build an intelligent, scalable system that analyzes architecture documents and generates insightful recommendations based on AWS Well-Architected best practices.

Let's personalize your content