Multi-LLM routing strategies for generative AI applications on AWS

AWS Machine Learning - AI

APRIL 9, 2025

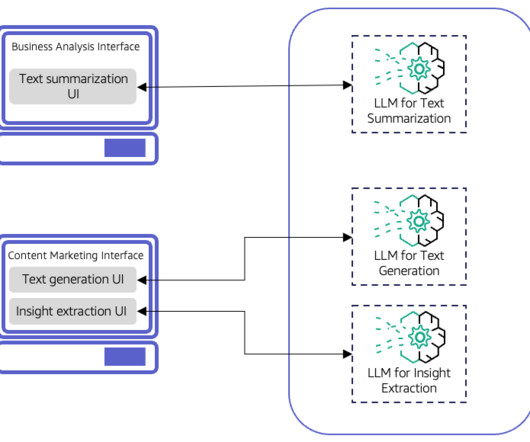

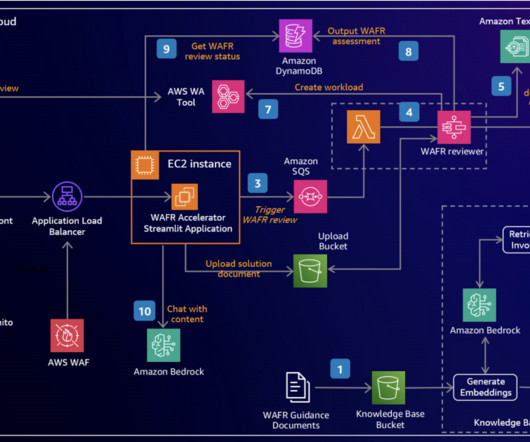

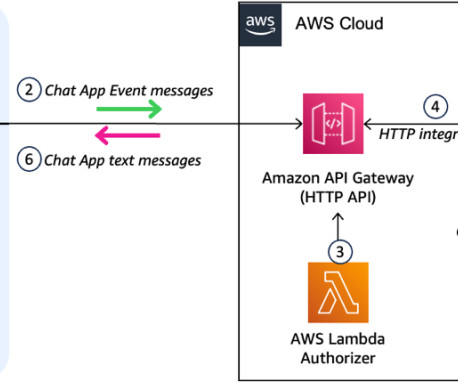

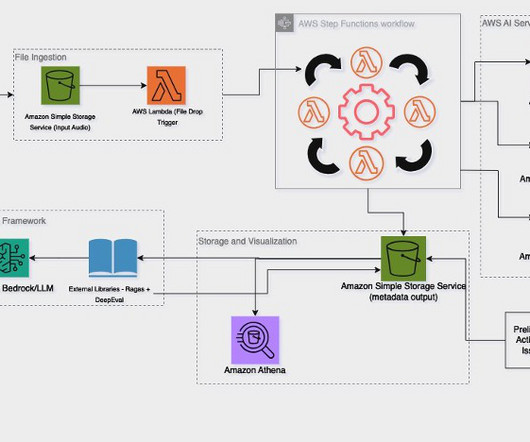

Organizations are increasingly using multiple large language models (LLMs) when building generative AI applications. Software-as-a-service (SaaS) applications with tenant tiering SaaS applications are often architected to provide different pricing and experiences to a spectrum of customer profiles, referred to as tiers.

Let's personalize your content