Revolutionizing customer service: MaestroQA’s integration with Amazon Bedrock for actionable insight

AWS Machine Learning - AI

MARCH 13, 2025

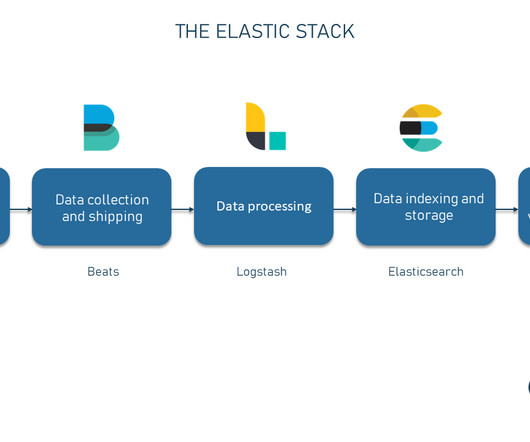

When MaestroQA's customers receive incoming calls at their call centers, MaestroQA transcribes the conversations with its proprietary transcription technology, which it built by enhancing open-source transcription models. One lending company uses MaestroQA to detect compliance risks in 100% of its conversations.
