Host concurrent LLMs with LoRAX

AWS Machine Learning - AI

APRIL 16, 2025

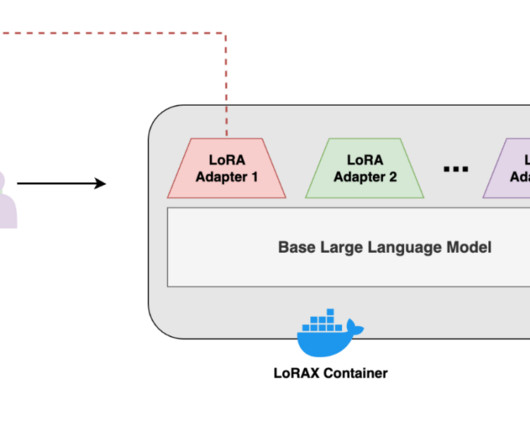

Furthermore, LoRAX supports quantization methods such as Activation-aware Weight Quantization (AWQ) and Half-Quadratic Quantization (HQQ).

Solution overview

The LoRAX inference container can be deployed on a single EC2 G6 instance, and models and adapters can be loaded from Amazon Simple Storage Service (Amazon S3) or Hugging Face.
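To make the adapter-loading step concrete, here is a minimal sketch of how a client might ask a running LoRAX container to serve a request with a specific adapter. It assumes the container exposes its REST `/generate` endpoint on `http://127.0.0.1:8080` and that the request accepts `adapter_id` and `adapter_source` parameters. The adapter names and bucket path are hypothetical placeholders, not values from the post.

```python
import json
import urllib.request

# Assumption: a LoRAX container is listening locally on port 8080.
LORAX_URL = "http://127.0.0.1:8080/generate"


def build_generate_payload(prompt, adapter_id=None, adapter_source=None,
                           max_new_tokens=64):
    """Build the JSON body for a generate request.

    adapter_id and adapter_source are optional; when omitted, the
    request targets the base model only.
    """
    parameters = {"max_new_tokens": max_new_tokens}
    if adapter_id is not None:
        parameters["adapter_id"] = adapter_id
    if adapter_source is not None:
        # Assumed values: "s3" for Amazon S3, "hub" for Hugging Face.
        parameters["adapter_source"] = adapter_source
    return {"inputs": prompt, "parameters": parameters}


def generate(prompt, **kwargs):
    """POST the payload to the server and return the generated text."""
    payload = build_generate_payload(prompt, **kwargs)
    request = urllib.request.Request(
        LORAX_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["generated_text"]


if __name__ == "__main__":
    # Hypothetical adapter locations, for illustration only.
    print(generate("Summarize: ...",
                   adapter_id="s3://example-bucket/adapters/summarizer",
                   adapter_source="s3"))
    print(generate("Translate: ...",
                   adapter_id="example-org/example-translation-adapter",
                   adapter_source="hub"))
```

Because the adapter is chosen per request, the same base model on one instance can answer both calls; only the `adapter_id` and `adapter_source` fields change.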
