Transforming workloads: Harnessing AI within VMware environments

CIO

APRIL 9, 2025

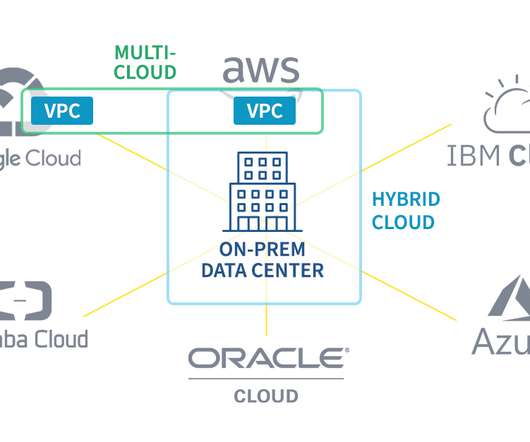

As a result, many IT leaders face a choice: build new infrastructure to create and support AI-powered systems from scratch, or find ways to deploy AI while leveraging their current infrastructure investments.

Infrastructure challenges in the AI era

It's difficult to build the level of on-premises infrastructure that AI requires.
