No-code business intelligence service y42 raises $2.9M seed round

TechCrunch

MARCH 22, 2021

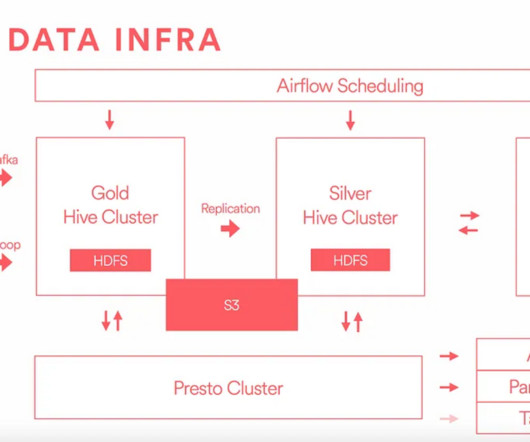

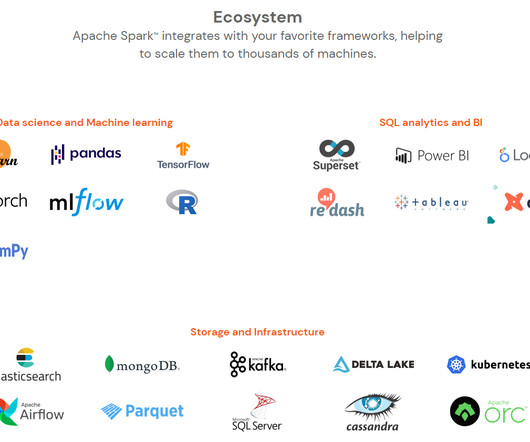

Berlin-based y42 (formerly known as Datos Intelligence), a data warehouse-centric business intelligence service that promises to give businesses access to an enterprise-level data stack that's as simple to use as a spreadsheet, today announced that it has raised a $2.9 million seed round.

y42 founder and CEO Hung Dang.
