Build and deploy a UI for your generative AI applications with AWS and Python

AWS Machine Learning - AI

NOVEMBER 6, 2024

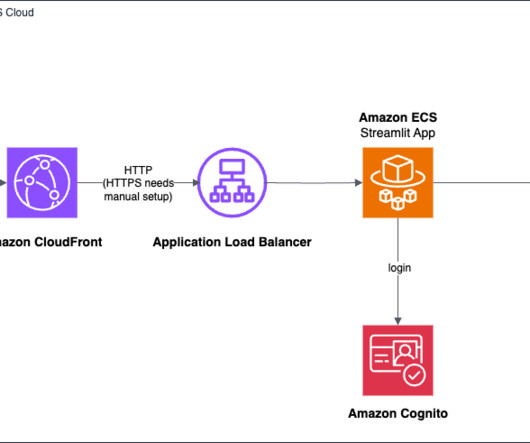

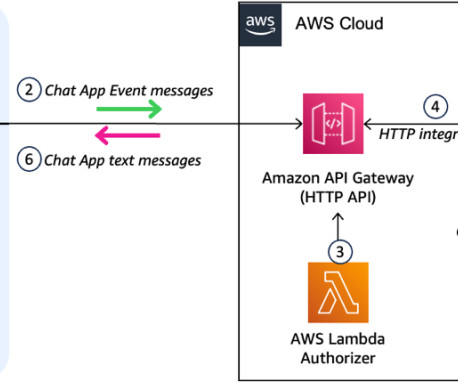

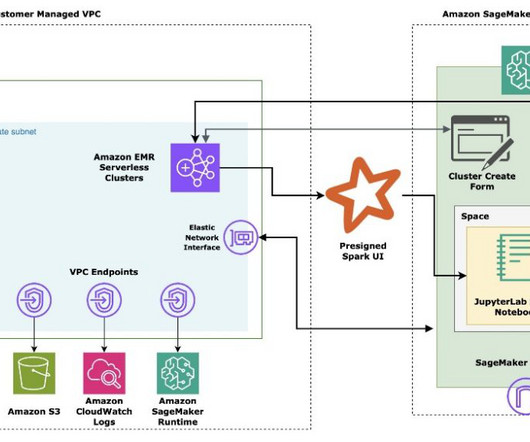

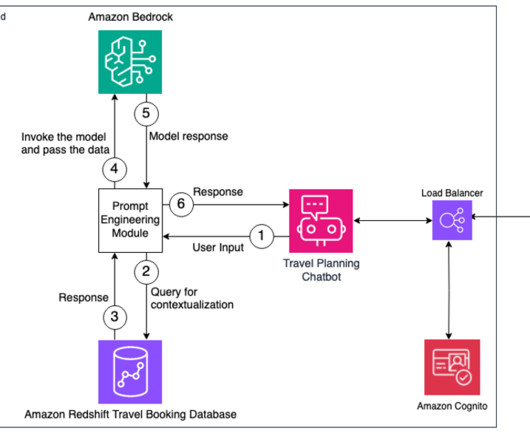

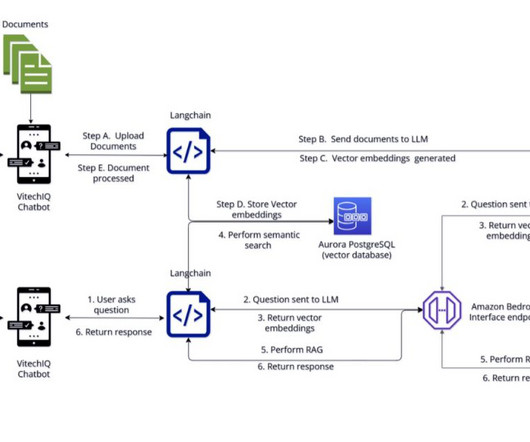

AWS provides a powerful set of tools and services that simplify the process of building and deploying generative AI applications, even for those with limited experience in frontend and backend development. The AWS deployment architecture makes sure the Python application is hosted and accessible from the internet to authenticated users.

Let's personalize your content