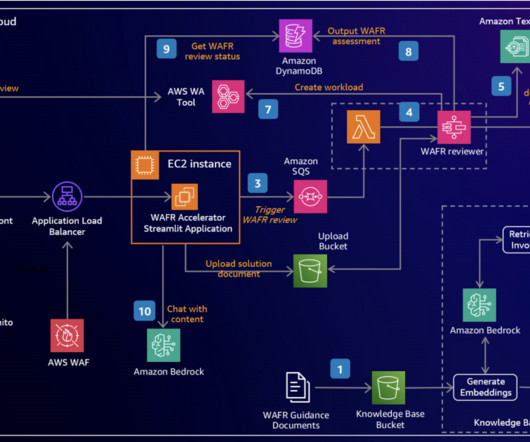

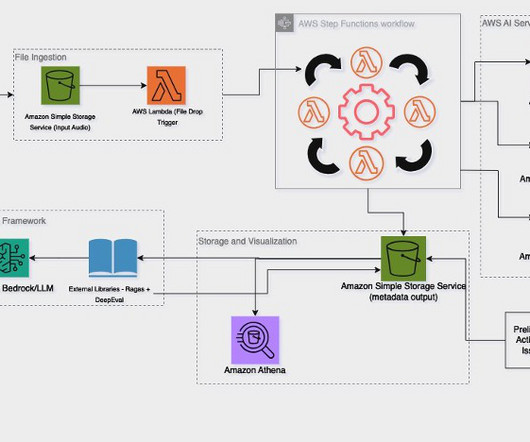

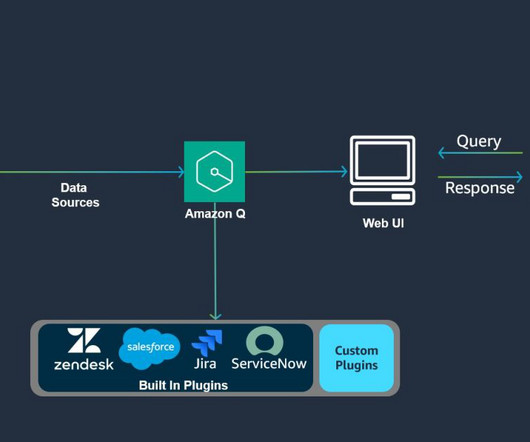

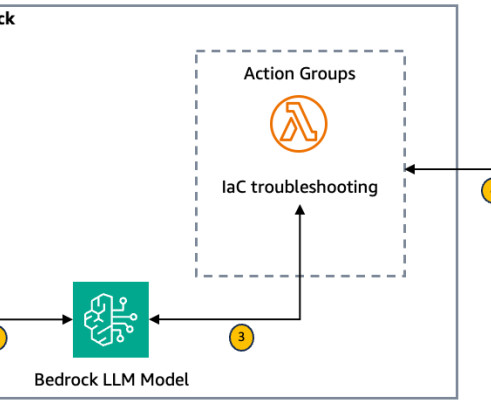

Accelerate AWS Well-Architected reviews with Generative AI

AWS Machine Learning - AI

MARCH 4, 2025

Building cloud infrastructure based on proven best practices promotes security, reliability and cost efficiency. To achieve these goals, the AWS Well-Architected Framework provides comprehensive guidance for building and improving cloud architectures. This systematic approach leads to more reliable and standardized evaluations.

Let's personalize your content