Deploy Meta Llama 3.1-8B on AWS Inferentia using Amazon EKS and vLLM

AWS Machine Learning - AI

NOVEMBER 26, 2024

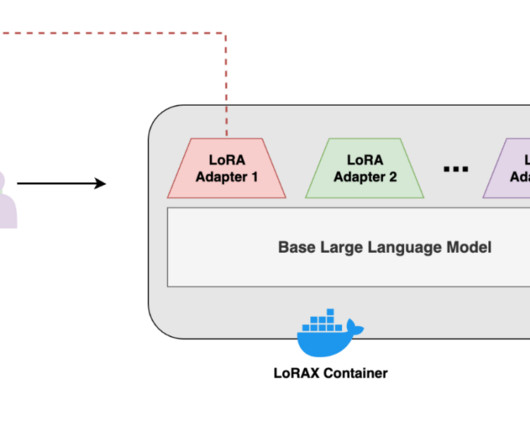

there is an increasing need for scalable, reliable, and cost-effective solutions to deploy and serve these models. AWS Trainium and AWS Inferentia based instances, combined with Amazon Elastic Kubernetes Service (Amazon EKS), provide a performant and low cost framework to run LLMs efficiently in a containerized environment.

Let's personalize your content