Build and deploy a UI for your generative AI applications with AWS and Python

AWS Machine Learning - AI

NOVEMBER 6, 2024

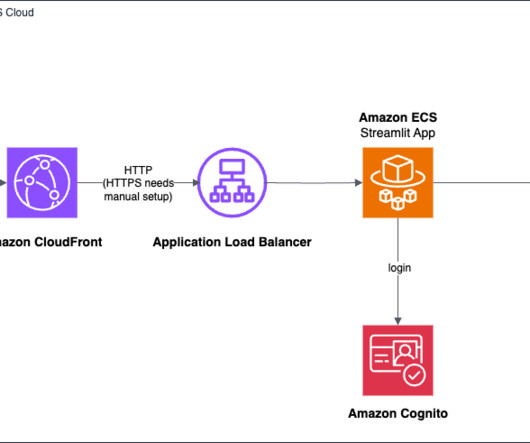

In this post, we explore a practical solution that uses Streamlit , a Python library for building interactive data applications, and AWS services like Amazon Elastic Container Service (Amazon ECS), Amazon Cognito , and the AWS Cloud Development Kit (AWS CDK) to create a user-friendly generative AI application with authentication and deployment.

Let's personalize your content