Multi-LLM routing strategies for generative AI applications on AWS

AWS Machine Learning - AI

APRIL 9, 2025

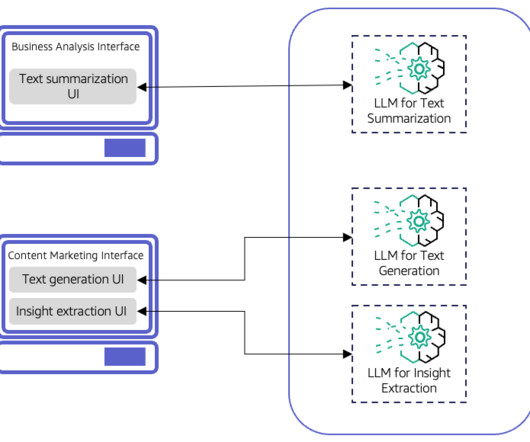

Organizations are increasingly using multiple large language models (LLMs) when building generative AI applications. Although an individual LLM can be highly capable, it might not optimally address a wide range of use cases or meet diverse performance requirements.

Let's personalize your content