Extend large language models powered by Amazon SageMaker AI using Model Context Protocol

AWS Machine Learning - AI

MAY 1, 2025

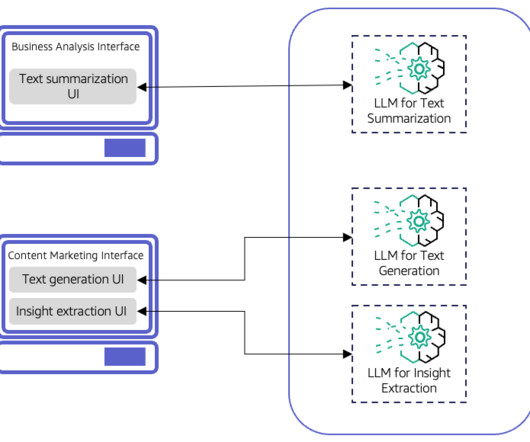

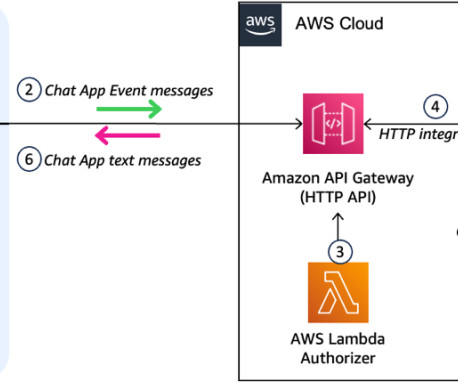

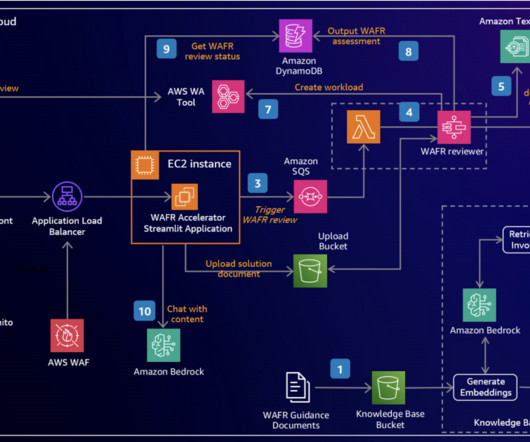

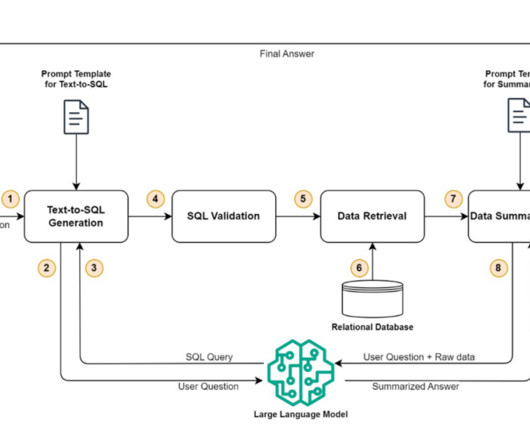

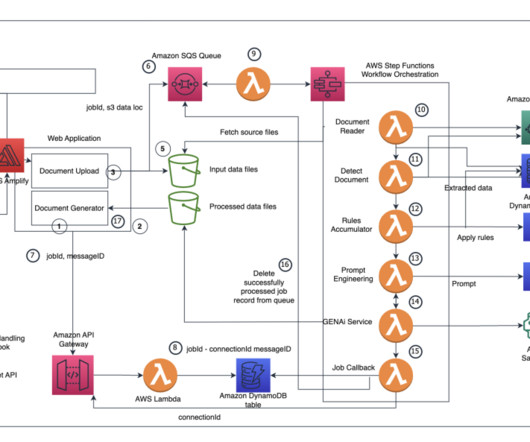

For MCP implementation, you need a scalable infrastructure to host these servers and an infrastructure to host the large language model (LLM), which will perform actions with the tools implemented by the MCP server. We will deep dive into the MCP architecture later in this post.

Let's personalize your content