Automate Amazon Bedrock batch inference: Building a scalable and efficient pipeline

AWS Machine Learning - AI

OCTOBER 29, 2024

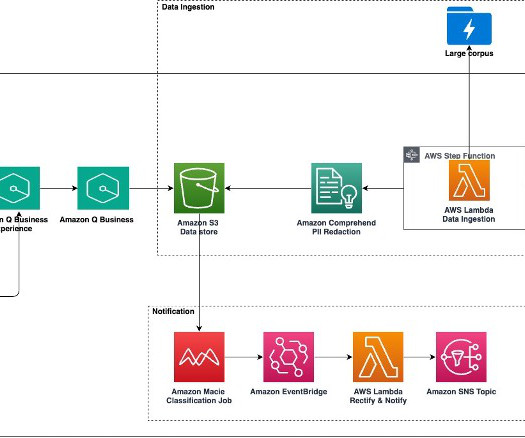

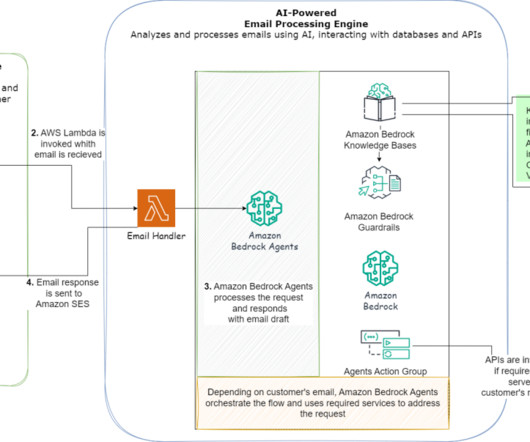

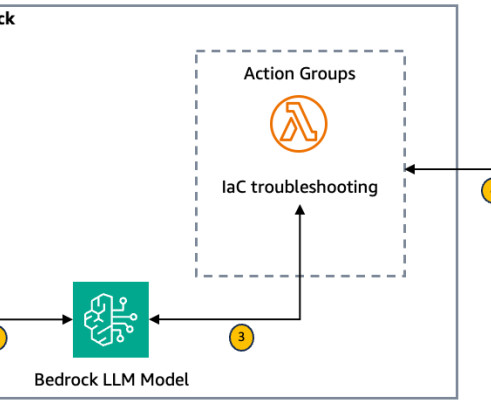

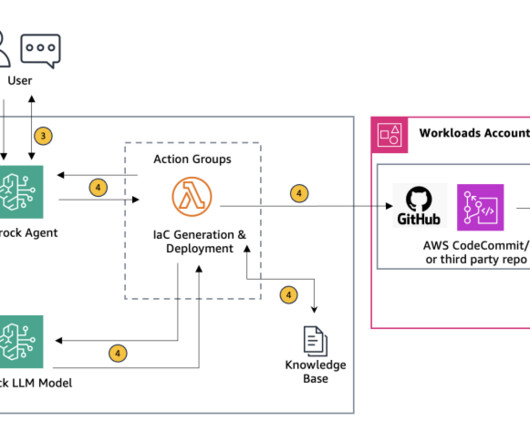

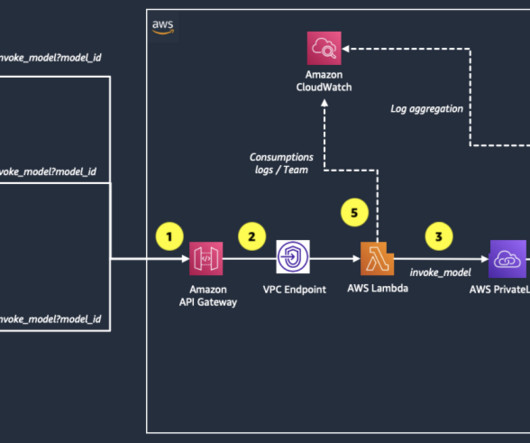

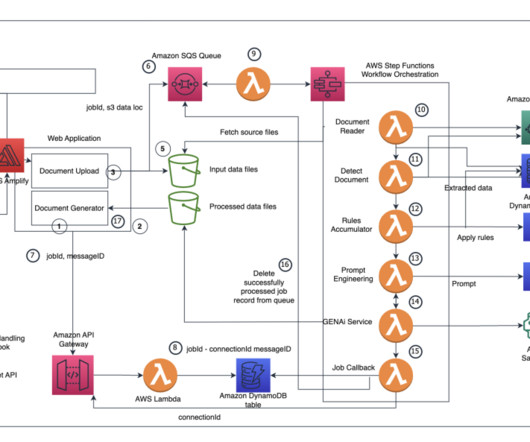

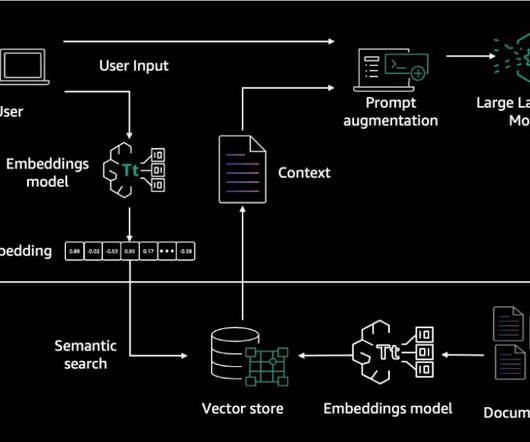

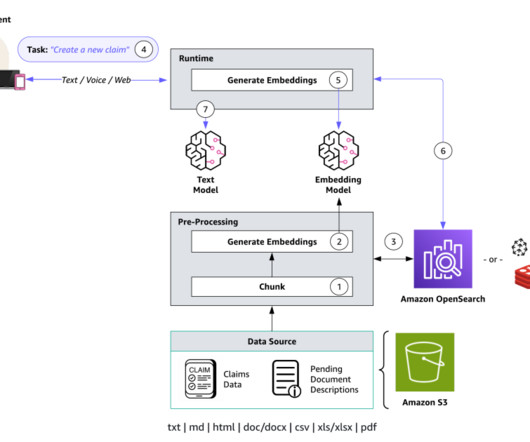

To address this consideration and enhance your use of batch inference, we’ve developed a scalable solution using AWS Lambda and Amazon DynamoDB. This post walks through the solution and details the core logic of the Lambda functions. Amazon S3 invokes the `{stack_name}-create-batch-queue-{AWS-Region}` Lambda function.
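As a rough illustration of that first step, the sketch below shows how a Lambda function invoked by Amazon S3 might turn each uploaded object into a queue entry in DynamoDB. It is not the code from the post: the item attributes (`job_id`, `input_s3_uri`, `status`, `created_at`), the `PENDING` status value, and the `QUEUE_TABLE_NAME` environment variable are all assumptions made for the example. Only the S3 event layout and the boto3 `Table.put_item` call are standard.

```python
import os
import urllib.parse
from datetime import datetime, timezone


def build_queue_items(event):
    """Turn an S3 event notification into queue entries (hypothetical schema)."""
    items = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        items.append({
            "job_id": f"{bucket}/{key}",             # assumed partition key
            "input_s3_uri": f"s3://{bucket}/{key}",
            "status": "PENDING",                     # assumed status value
            "created_at": datetime.now(timezone.utc).isoformat(),
        })
    return items


def enqueue(items, table):
    """Write each entry to a DynamoDB table (or any object with put_item)."""
    for item in items:
        table.put_item(Item=item)
    return len(items)


def lambda_handler(event, context):
    # boto3 is imported here so the helpers above can be exercised without it.
    import boto3

    # QUEUE_TABLE_NAME is an assumed environment variable, not from the post.
    table = boto3.resource("dynamodb").Table(os.environ["QUEUE_TABLE_NAME"])
    return {"queued": enqueue(build_queue_items(event), table)}
```

Keeping the event parsing separate from the DynamoDB write means the parsing logic can be checked locally with a hand-built event and a stand-in table object before anything is deployed.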
