Create a generative AI–powered custom Google Chat application using Amazon Bedrock

AWS Machine Learning - AI

OCTOBER 31, 2024

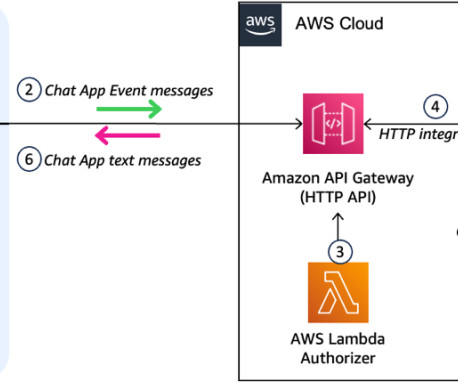

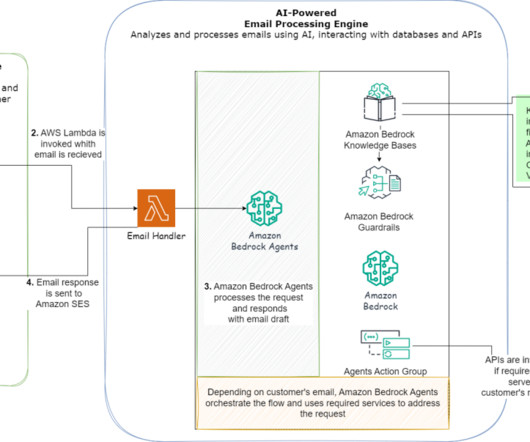

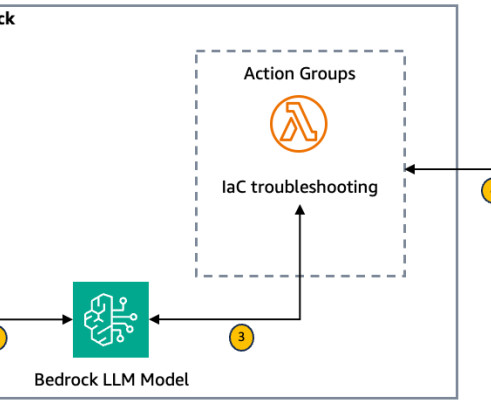

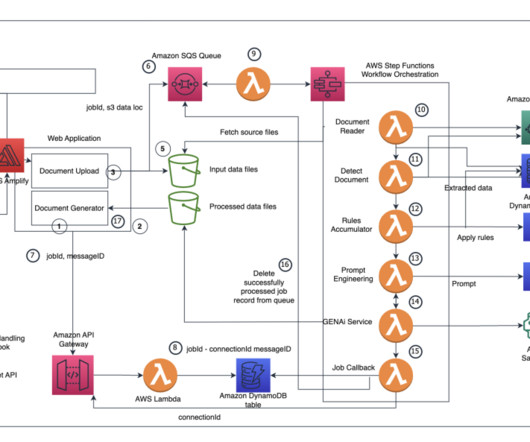

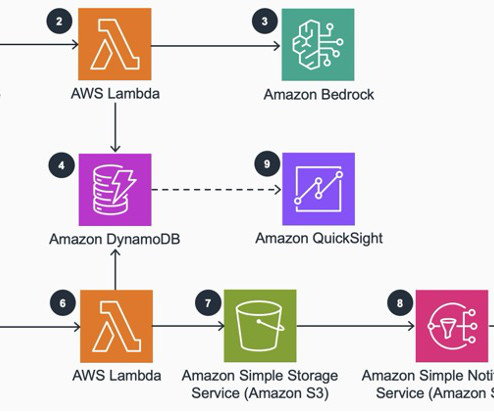

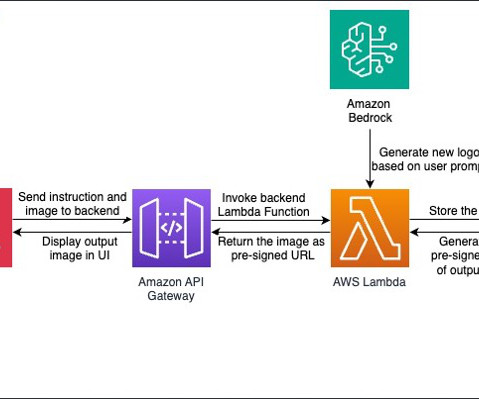

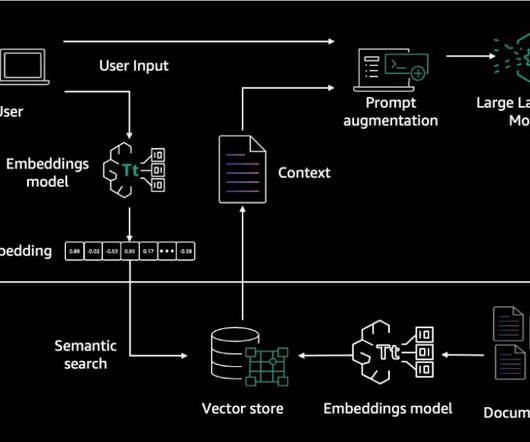

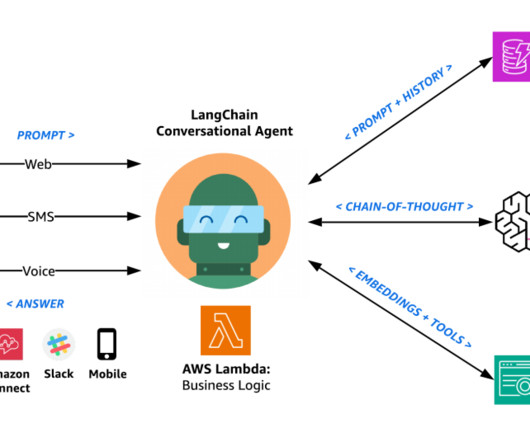

By implementing this architectural pattern, organizations that use Google Workspace can empower their workforce to access groundbreaking AI solutions powered by Amazon Web Services (AWS) and make informed decisions without leaving their collaboration tool. This request contains the user’s message and relevant metadata.

Let's personalize your content