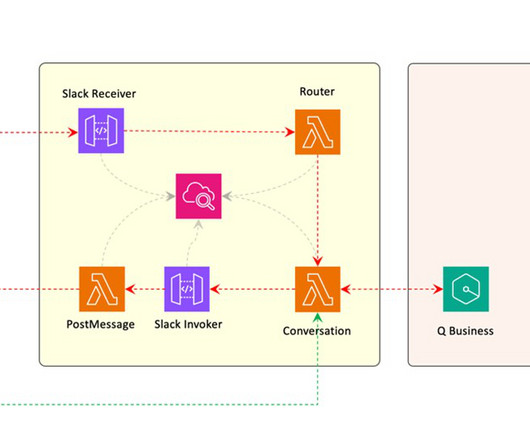

Multi-LLM routing strategies for generative AI applications on AWS

AWS Machine Learning - AI

APRIL 9, 2025

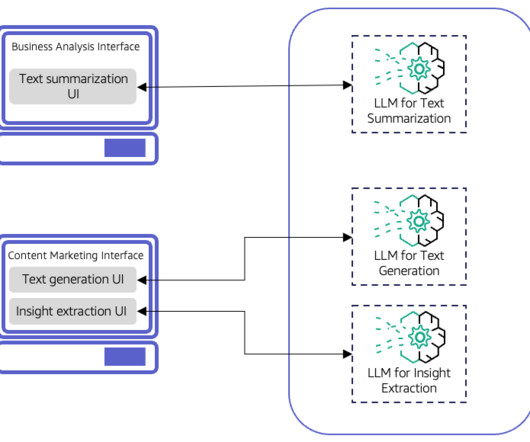

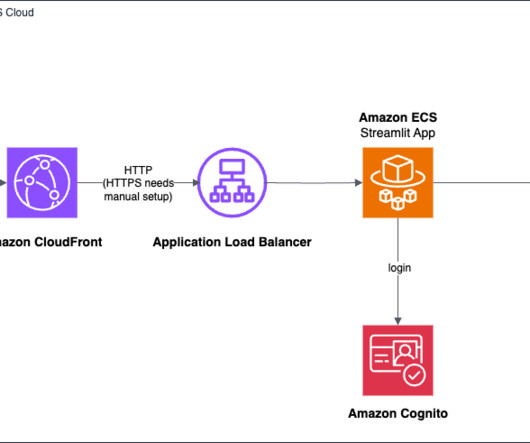

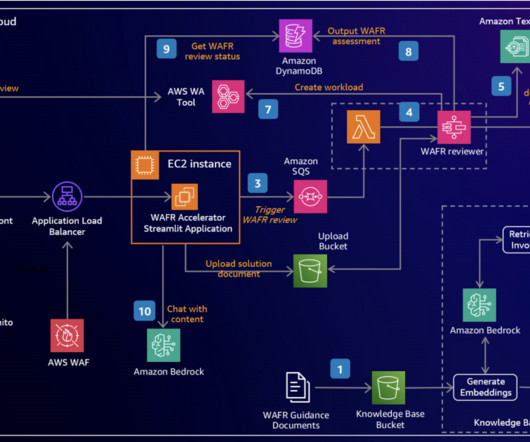

Organizations are increasingly using multiple large language models (LLMs) when building generative AI applications. Combining models in this way can make applications more robust, versatile, and efficient, so they better serve diverse user needs and business objectives. In this post, we provide an overview of common multi-LLM applications.
