What is data architecture? A framework to manage data

CIO

DECEMBER 20, 2024

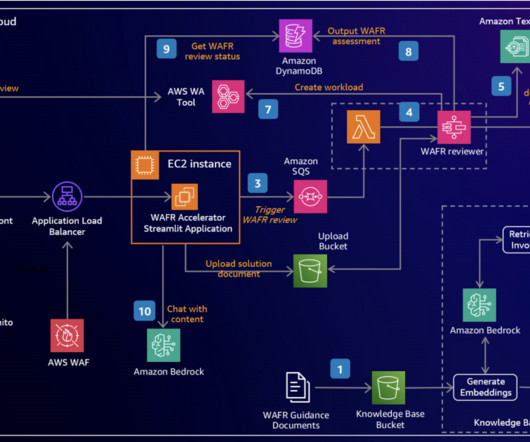

Data architecture is an offshoot of enterprise architecture. It comprises the models, policies, rules, and standards that govern how an organization collects, stores, arranges, integrates, and uses data, covering the full path from data collection and refinement through storage, analysis, and delivery. Related concerns include cloud storage and establishing a common vocabulary for data across the organization.
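The lifecycle described above (collection, refinement, storage, analysis, delivery) and the idea of a common vocabulary can be illustrated with a small sketch. This is a hypothetical example, not a reference implementation. The data dictionary, the field names `customer_id` and `order_total`, and the stage functions are all invented for illustration. The point is that every stage relies on one shared definition of what each field means and what type it has.

```python
from dataclasses import dataclass


# Hypothetical "common vocabulary": a shared data dictionary every stage agrees on.
@dataclass(frozen=True)
class FieldDef:
    name: str
    dtype: type
    description: str


DATA_DICTIONARY = {
    "customer_id": FieldDef("customer_id", str, "Unique customer identifier (hypothetical)"),
    "order_total": FieldDef("order_total", float, "Order value in USD (hypothetical)"),
}


def collect(raw_rows):
    """Collection: accept raw records as-is from a source system."""
    return list(raw_rows)


def refine(rows):
    """Refinement: keep only dictionary fields and coerce them to the agreed types."""
    refined = []
    for row in rows:
        try:
            refined.append({name: f.dtype(row[name]) for name, f in DATA_DICTIONARY.items()})
        except (KeyError, ValueError):
            # Drop records that are missing a field or cannot be coerced to the agreed type.
            continue
    return refined


def store(rows, storage):
    """Storage: append refined records to a simple in-memory store (stand-in for a database)."""
    storage.extend(rows)
    return storage


def analyze(storage):
    """Analysis: total order value per customer."""
    totals = {}
    for row in storage:
        cid = row["customer_id"]
        totals[cid] = totals.get(cid, 0.0) + row["order_total"]
    return totals


def deliver(totals):
    """Delivery: format results for a consumer such as a report or dashboard."""
    return [f"{cid}: {total:.2f}" for cid, total in sorted(totals.items())]


if __name__ == "__main__":
    raw = [
        {"customer_id": "c1", "order_total": "10.5", "note": "extra field is ignored"},
        {"customer_id": "c2", "order_total": "3"},
        {"customer_id": "c1", "order_total": "4.5"},
        {"order_total": "7"},                          # missing customer_id: dropped
        {"customer_id": "c3", "order_total": "n/a"},   # not a number: dropped
    ]
    storage = []
    store(refine(collect(raw)), storage)
    print(deliver(analyze(storage)))  # ['c1: 15.00', 'c2: 3.00']
```

Keeping the type and meaning of each field in one dictionary, rather than repeating them in every stage, is what lets the refinement step reject inconsistent records before they reach storage and analysis.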
